To manage the limited space in a cache, items are discarded through a process known as cache eviction. In this article, we’ll explore different cache eviction policies through a fun and relatable analogy of a coffee shop. By the end, you’ll understand:

- Why Caching Matters

- What to Cache

- The Principle of Locality

- Cache Thrashing

- Cache Eviction Policies

- LFU

- LRU

- Segmented LRU

- Coffee shop simulation

- Redis & .NET Implementation

💡 Why caching?

Caching is a critical component in any high-performance application. At its core, caching involves storing frequently accessed data closer to where it’s needed. While traditional databases and storage systems are durable, constant calls to them slow down your application. By caching, we store high-usage data in faster, more accessible storage.

But how critical is performance? Consider this: in a 2006 Amazon study, a 100-millisecond delay in page load time was found to reduce sales by 1%. That’s a staggering potential loss of $1.6 billion. Fast-forward to today, and user expectations for speed are even higher. The stakes? Even larger. (Read more about this study here).

❓What should you cache?

Caching improves performance, but it comes with a cost. Cache storage is expensive and limited. The question becomes: what data should you cache?

Here’s where Pareto’s Law (the 80/20 rule) comes into play.

80% of consequences come from 20% of causes.

Applying this to caching means that caching the most important 20% of your data could provide substantial performance improvements. However, this is just an approximation, and the actual ratio will vary based on your use case.

🪧 Principle of Locality

Understanding what to cache is driven by the Principle of Locality, which predicts what data is likely to be needed again.

- Temporal Locality: If data is accessed now, it’s likely to be accessed again soon. Think of a viral Twitter post—once it gains traction, it will be repeatedly viewed. Caching it makes sense.

- Spatial Locality: When one piece of data is accessed, related data is likely to follow. Imagine you’re browsing Amazon; you view a product and are immediately shown recommendations. Caching related products based on what users view fits here.

- Statistical Locality: Over time, patterns emerge in data access. For example, an item that has been frequently ordered in an e-commerce platform is a good candidate for caching.

The principle of locality determines which items survive in the cache and which items are evicted when the cache memory is full.

⚔️ Cache Thrashing

In caching, when an item is requested and successfully found in the cache, it’s known as a cache hit. Cache hits improve performance by quickly serving data from memory without needing to access the underlying database. Conversely, when the requested item is not found in the cache, it results in a cache miss, requiring the system to fetch the data from the slower, original source.

To manage the limited space in a cache, items are discarded through a process known as cache eviction. However, when cache misses happen too frequently and the same items are constantly evicted and reloaded, a phenomenon known as cache thrashing occurs.

Cache thrashing can have a severe impact on application performance, causing delays and increasing the load on your system. For example, imagine a Twitter post going viral. If a basic TTL (Time to Live) strategy is used as the cache policy for the post, once the TTL expires, the post is evicted from the cache—even though it’s still in high demand. This causes frequent cache misses, as the post is repeatedly fetched from the database and reloaded into the cache, over and over again, straining system resources. So, it is essential to select an appropriate eviction technique based on the use case.

📤 Cache Eviction Techniques

Now, let’s dive into three major cache eviction techniques, using our coffee shop analogy to explain each one.

🔢 Least Frequently Used (LFU)

Imagine you run a coffee shop with five tables. All tables are occupied, and a new customer arrives. You need to free up a table—whom do you ask to leave?

With LFU, you ask the customer who visits least frequently to leave. This works for certain scenarios, like an e-commerce store where long-term popular items (even if not recently ordered) should be kept in cache. However, this method isn’t always perfect—your least frequent visitor today may become a regular tomorrow.

⌚ Least Recently Used (LRU)

In the same coffee shop scenario, instead of looking at who visits least frequently, you consider how long it’s been since someone last visited. The person who hasn’t visited in the longest time gets asked to leave.

This ties to temporal locality—data accessed more recently is more likely to be accessed again soon. This method works great for scenarios like social media, where posts that gain traction are repeatedly accessed in a short time frame.

🧮 Segmented LRU

To get the best of both worlds, you can divide your coffee shop into two sections:

- New Customers (recent visitors) ⌚

- Loyal Customers (frequent visitors) 🔢

When a new customer arrives, they are seated in the “New Customers” section. After visiting a few more times, they are promoted to the “Loyal Customers” section, based on how frequently they visit. When space is needed, we first evict people from the “New Customers” section, prioritizing those who have been there the longest, i.e., based on recency. If a new customer is promoted to the “Loyal Customers” section but there’s no space, a loyal customer is demoted back to the “New Customers” section, again based on recency. If space is still required, the demoted customers will eventually be evicted. It’s important to note that promotion is based on frequency, while both eviction and demotion are based on recency.

Segmented LRU blends recency and frequency by storing “hot” (frequently accessed) and “cold” (recently accessed) data separately, evicting cold data first but promoting frequently accessed data as needed. The below diagram represents the workflow for segmented LRU.

🍵 Coffee Shop Simulation- Segmented LRU

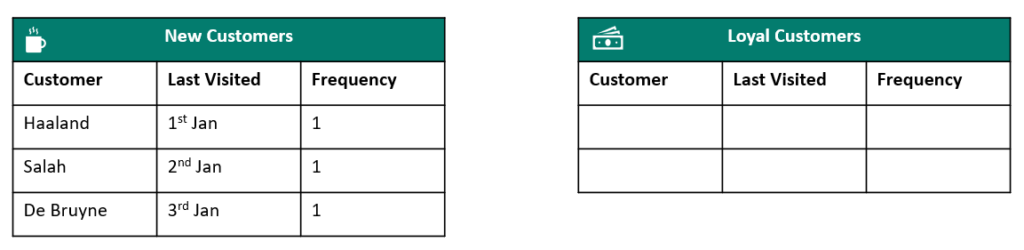

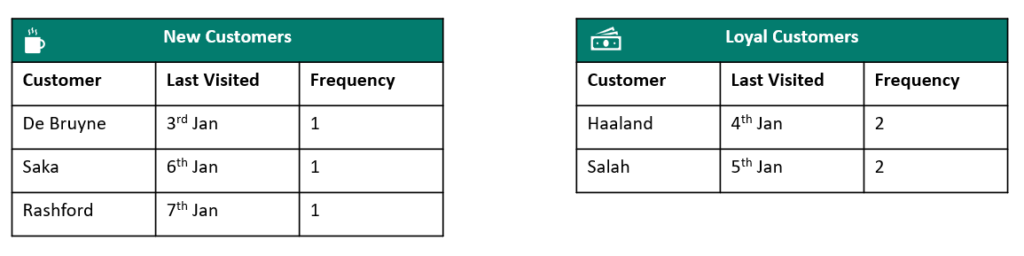

Let’s try to simulate segmented LRU workflow. We will make few assumptions for the simulation.

New customers capacity: 3

Loyal Customers Capacity: 2

Threshold for Promotion: 1

Step 1: We will consider below is the initial status. “New Customers” section is full at the moment, but no one is yet promoted to “Loyal Customers”.

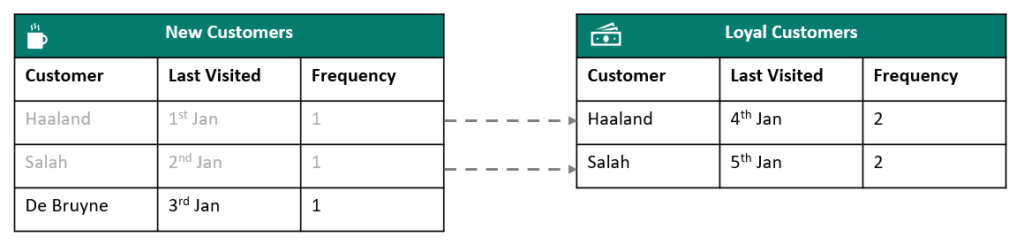

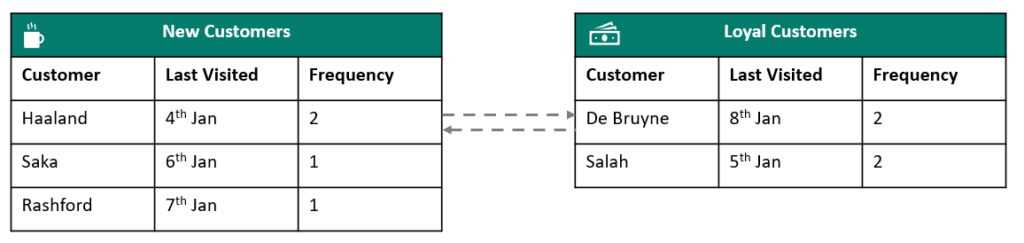

Step 2: Now Haaland & De Bruyne visit again. They have crossed the threshold for promotion so both are promoted to “Loyal Customers”. 🔥

Step 3: Now, Saka & Rashford visit once each, filling up the new customers section. So we have both the sections full at the moment. 💯

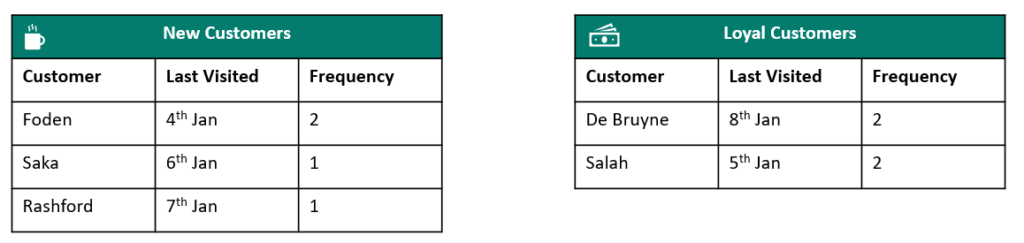

Step 4: At this point, De Bruyne visits again, and things get interesting. De Bruyne is now eligible for promotion to the “Loyal Customers” section, but there’s no space left. To make room, we need to evict someone from the “Loyal Customers” section. Based on recency, Haaland is chosen for eviction. However, Haaland isn’t completely removed from the system—he gets demoted back to the “New Customers” section instead.

Step 5: Finally, let’s say Foden comes in to have a coffee. Based on recency we have to let our dearest Haaland go to make way for Foden in the “New Customers” section. 😭

🚀 Redis & .NET implementation

With the above simulation we have covered the full cycle of an item in a segmented LRU workflow. Redis is one of the most popular caching databases in recent times. However, Redis doesn’t natively support Segmented LRU, so it requires custom implementation at the application level. I’ve developed a solution using .NET Core and Redis, to cover the end-end simulation. I’ve detailed the implementation here. Feel free to explore it!

You can also check out my project on GitHub through this link.

💹 Conclusion

Through this coffee shop analogy, we’ve covered key cache eviction techniques, each with its strengths and trade-offs. By connecting real-world examples to technical concepts, I find them easier to apply. Hope this helps you too.